It seems like we can’t go a day without seeing “artificial intelligence” in the news, and this past week was no exception, in no small part thanks to the Snapdragon Tech Summit. Every year, Qualcomm unveils the plethora of improvements it brings to its Hexagon DSP and the Qualcomm AI Engine, a term they use for their entire heterogeneous compute platform – CPU, GPU, and DSP – when talking about AI workloads. A few years ago, Qualcomm’s insistence on moving the conversation away from traditional talking points, such as year-on-year CPU performance improvements, seemed a bit odd. Yet in 2019, with the Snapdragon 865, we see that heterogeneous computing is indeed at the helm of their mobile computing push, as AI and hardware-accelerated workloads sneak their way into a breadth of use cases and applications, from social media to everyday services.

The Snapdragon 865 is bringing Qualcomm’s 5th generation AI engine, and with it come juicy improvements in performance and power efficiency — but that’s to be expected. In a sea of specifications, performance figures, fancy engineering terms, and tiresome marketing buzzwords, it’s easy to lose sight of what these improvements actually mean. What do they describe? Why are these upgrades so meaningful to those implementing AI in their apps today, and perhaps more importantly, to those looking to do so in the future?

In this article, we’ll take an approachable yet thorough tour of the Qualcomm AI Engine, combing through its history, its components, and the Snapdragon 865’s upgrades, and, most importantly, how each of these has contributed to today’s smartphone experience, from funny filters to digital assistants.

The Hexagon DSP and Qualcomm AI Engine: When branding makes a difference

While I wasn’t able to attend this week’s Snapdragon Tech Summit, I have nonetheless attended every other one since 2015. If you recall, that was the year of the hot mess that was the Snapdragon 810, and so journalists at that Chelsea loft in New York City were eager to find out how the Snapdragon 820 would redeem the company. And it was a great chipset, alright: It promised healthy performance improvements (with none of the throttling) by going back to the then-tried-and-true custom cores Qualcomm was known for. Yet I also remember a very subtle announcement that, in retrospect, ought to have received more attention: the second generation Hexagon 680 DSP and its single instruction, multiple data (SIMD) Hexagon Vector eXtensions, or HVX. Perhaps if engineers hadn’t named the feature, it would have received the attention it deserved.

This coprocessor allows the scalar DSP unit’s hardware threads to access HVX “contexts” (register files) for wide vector processing capabilities. It enabled the offloading of significant compute workloads from the power-hungry CPU or GPU to the power-efficient DSP, so that imaging and computer vision tasks would run at substantially improved performance per milliwatt. These vector units are ideal for applying identical operations to contiguous vector elements (originally just integers), making them a good fit for computer vision workloads. We’ve written an in-depth article on the DSP and HVX in the past, noting that the HVX architecture lends itself well to parallelization and, obviously, to processing large input vectors. At the time, Qualcomm promoted both the DSP and HVX almost exclusively by describing the improvements they would bring to computer vision workloads such as the Harris corner detector and other sliding window methods.

It wasn’t until the advent of deep learning in consumer mobile applications that the DSP, its vector processing units (and now, a tensor accelerator) would get married to AI and neural networks in particular. But looking back, it makes perfect sense: the digital signal processor (DSP) architecture, originally designed for handling digitized real-world or analog signal inputs, lends itself to many of the same workloads as machine learning algorithms and neural networks. For example, DSPs are tailored for filter kernels, convolution and correlation operations, 8-bit calculations, a ton of linear algebra (vector and matrix products), and multiply-accumulate (MAC) operations, all of which are most efficient when parallelized. A neural network’s runtime is also highly dependent on multiplying large vectors, matrices and/or tensors, so it’s only natural that the DSP’s performance advantages neatly translate to neural network architectures as well. We will revisit this topic shortly!
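To make the multiply-accumulate idea concrete, here is a minimal Python sketch (an illustration of the arithmetic, not DSP code) that writes a dot product as an explicit chain of MAC steps and then reuses it for a simple filter kernel sliding over a signal. A DSP or vector unit performs many of these MACs per instruction; the loop only shows what is being accelerated. The function names are made up for this example.

```python
def mac_dot(xs, ws):
    """Dot product written as an explicit chain of multiply-accumulate (MAC) steps."""
    if len(xs) != len(ws):
        raise ValueError("vectors must have the same length")
    acc = 0.0
    for x, w in zip(xs, ws):
        acc += x * w  # one MAC: multiply, then add into a running sum
    return acc


def fir_filter(signal, taps):
    """'Valid'-mode filter kernel in correlation form (taps not reversed):
    every output sample is one dot product, i.e. a short run of MACs."""
    n = len(taps)
    return [mac_dot(signal[i:i + n], taps) for i in range(len(signal) - n + 1)]


# A two-tap moving average over a short hypothetical signal.
smoothed = fir_filter([1, 2, 3, 4], [0.5, 0.5])
```

The same dot-product routine serves both the classic signal-processing filter and, as the next section shows, a neural network layer.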

In subsequent years, Qualcomm continued to emphasize that they offer not just chipsets, but mobile platforms, and that they focus not just on improving particular components, but on delivering “heterogeneous” compute. In 2017, they released their Snapdragon Neural Processing Engine SDK (for runtime acceleration) on the Qualcomm Developer Network, and in early 2018 they announced the Qualcomm Artificial Intelligence Engine to consolidate their various AI-capable hardware (CPU, GPU, DSP) and software components under a single name. With this useful nomenclature, they were able to neatly advertise their AI performance improvements on both the Snapdragon 855 and Snapdragon 865, comfortably spelling out the number of trillions of operations per second (TOPS) and year-on-year percentage improvements. Harnessing the generational improvements in CPU, GPU, and DSP – all of which see their own AI-focused upgrades – the company is able to post impressive benchmarks against competitors, which we’ll go over shortly. With the company’s recent marketing efforts and unified, consistent messaging on heterogeneous computing, their AI branding is finally gaining traction among journalists and tech enthusiasts.

Demystifying Neural Networks: A mundane pile of linear algebra

To disentangle a lot of jargon we’ll come across later in the article, we need a short primer on what a neural network is and what you need to make it faster. I want to very briefly go over some of the mathematical underpinnings of neural networks, avoiding as much jargon and notation as possible. The purpose of this section is simply to identify what a neural network is doing, fundamentally: the arithmetic operations it executes, rather than the theoretical basis that justifies said operations (that is far more complicated!). Feel free to proceed to the next section if you want to jump straight to the Qualcomm AI Engine upgrades.

“Vector math is the foundation of deep learning.” – Travis Lanier, Senior Director of Product Management at Qualcomm, at the 2017 Snapdragon Tech Summit

Below you will find a very typical feedforward fully-connected neural network diagram. In reality, the diagram makes the whole process look a bit more complicated than it is (at least, until you get used to it). We will compute a forward pass, which is ultimately what a network is doing whenever it produces an inference, a term we’ll encounter later in the article as well. At the moment, we will only concern ourselves with the machine and its parts, with brief explanations of each component.

A neural network consists of sequential layers, each composed of several “neurons” (depicted as circles in the diagram) connected by weights (depicted as lines in the diagram). In general terms, there are three kinds of layers: the input layer, which takes the raw input; hidden layers, which compute mathematical operations on the previous layer’s output; and the output layer, which provides the final predictions. In this case, we have only one hidden layer, with three hidden units. The input consists of a vector, array, or list of numbers of a particular dimension or length. In the example, we will have a two-dimensional input, let’s say [1.0, -1.0]. Here, the output of the network consists of a scalar or single number (not a list). Each hidden unit is associated with a set of weights and a bias term, shown alongside and below each node. To calculate the weighted sum output of a unit, each weight is multiplied by its corresponding input, and then the products are added together. Then, we simply add the bias term to that sum of products, resulting in the output of the neuron. For example, with our input of [1.0, -1.0], the first hidden unit will have an output of 1.0*0.3 + (-1.0)*0.2 + 1.0 = 1.1. Simple, right?
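The weighted-sum-plus-bias step for a single hidden unit takes only a few lines of Python. The function name `neuron` is an illustrative choice; the weights (0.3, 0.2) and bias (1.0) are the first hidden unit’s values from the example.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias term (before any activation)."""
    # Multiply each input by its corresponding weight and add up the products...
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # ...then add the bias.
    return weighted_sum + bias


# First hidden unit from the example: weights 0.3 and 0.2, bias 1.0.
h1 = neuron([1.0, -1.0], [0.3, 0.2], 1.0)
print(h1)  # 1.1, up to floating-point rounding
```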

The next step in the diagram represents an activation function, which is what allows us to produce the output vector of each hidden layer. In our case, we will be using the very popular and extremely simple rectified linear unit, or ReLU, which takes an input number and outputs either (i) zero, if that number is negative or zero, or (ii) the input number itself, if the number is positive. For example, ReLU(-0.1) = 0, but ReLU(0.1) = 0.1. Following our input as it propagates through that first hidden unit, the output of 1.1 that we computed would be passed into the activation function, yielding ReLU(1.1) = 1.1. The output layer, in this example, will function just like a hidden unit: it will multiply the hidden units’ outputs by its weights, and then add its bias term of 0.2. The last activation function, the step function, will turn positive inputs into 1 and negative values into 0. Knowing what each operation in the network does, we can now write down the complete computation of our inference.
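Because only the first hidden unit’s parameters and the output bias are spelled out in the text, the full forward pass can be written symbolically. Here, $w_{j1}$, $w_{j2}$ and $b_j$ are labels (introduced for this illustration) for hidden unit $j$’s weights and bias, $v_j$ for the output layer’s weights, and $x = (x_1, x_2) = (1.0, -1.0)$ is the input:

```latex
\begin{aligned}
h_j &= \operatorname{ReLU}\left(w_{j1} x_1 + w_{j2} x_2 + b_j\right), \qquad j = 1, 2, 3,\\
\hat{y} &= \operatorname{step}\left(v_1 h_1 + v_2 h_2 + v_3 h_3 + 0.2\right).
\end{aligned}
```

Plugging in the first unit’s values gives $h_1 = \operatorname{ReLU}(0.3 \cdot 1.0 + 0.2 \cdot (-1.0) + 1.0) = 1.1$, matching the hand calculation.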

That is all there is to our feedforward neural network computation. As you can see, the operations consist almost entirely of products and sums of numbers. Our activation function ReLU(x) can be implemented very easily as well, for example by simply calling max(x, 0), which returns x whenever the input is greater than 0 and 0 otherwise. Note that step(x) can be computed similarly. Many more complicated activation functions exist, such as the sigmoid function or the hyperbolic tangent, which involve different internal computations and are better suited for different purposes. Another thing you may already notice is that we can run the three hidden units’ computations, and their ReLU applications, in parallel: none of them depends on the others, and their values are only needed together once we calculate their weighted sum at the output node.
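Putting the pieces together, here is a minimal Python sketch of the whole forward pass, with ReLU implemented as max(x, 0) and the step function as a comparison. Only the first hidden unit’s weights (0.3, 0.2, bias 1.0) and the output bias of 0.2 come from the example; the other two hidden units and the output weights are hypothetical values, since the diagram’s numbers aren’t reproduced in the text. Mapping a step input of exactly zero to 0 is also an assumption.

```python
def relu(x):
    """ReLU(x) = max(x, 0): positives pass through, everything else becomes 0."""
    return max(x, 0.0)


def step(x):
    """Step activation: 1 for positive inputs, 0 otherwise (zero -> 0, by assumption)."""
    return 1.0 if x > 0 else 0.0


def forward(x, hidden, out_weights, out_bias):
    """Forward pass of a one-hidden-layer fully-connected network."""
    # Hidden units do not depend on each other, so these could run in parallel.
    h = [relu(sum(xi * wi for xi, wi in zip(x, w)) + b) for w, b in hidden]
    # Output node: weighted sum of hidden activations plus bias, then step.
    z = sum(hi * vi for hi, vi in zip(h, out_weights)) + out_bias
    return h, step(z)


# Unit 1 is from the example; units 2 and 3 and OUT_WEIGHTS are hypothetical.
HIDDEN = [([0.3, 0.2], 1.0), ([-0.5, 0.4], 0.1), ([0.8, -0.6], -0.2)]
OUT_WEIGHTS = [0.5, -1.0, 0.25]
OUT_BIAS = 0.2  # output bias from the example

h, y = forward([1.0, -1.0], HIDDEN, OUT_WEIGHTS, OUT_BIAS)
```

With these made-up weights, the hidden activations come out to roughly [1.1, 0.0, 1.2], the output node’s weighted sum to about 1.05, and the prediction to 1. The list comprehension computing `h` is exactly the part that parallel hardware can spread out.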

But we don’t have to stop there. Above, you can see the same computation, but this time represented with matrix and vector multiplication operations instead. To arrive at this representation, we “augment” our input vector by appending a 1.0 to it (lighter hue), such that when we put our weights and our bias (lighter hue) in the matrix as shown above, the resulting multiplication yields the same hidden unit outputs. Then, we can apply ReLU to the output vector, element-wise, and then “augment” the ReLU output to multiply it by the weights and bias of our output layer. This representation greatly simplifies notation, as the parameters (weights and biases) of an entire hidden layer can be tucked under a single variable. But most importantly for us, it makes it clear that the inner computations of the network are essentially matrix-vector multiplications, that is, collections of dot products. Given how the size of these vectors and matrices scales with the dimensionality of our inputs and the number of parameters in our network, most of the runtime will be spent doing these sorts of calculations. A bunch of linear algebra!
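The augmented matrix form can be sketched the same way in plain Python (no libraries, to keep it self-contained). Row 1 of `W1` holds the first hidden unit’s weights and bias from the example; rows 2 and 3 and the output layer’s weights are hypothetical, and only the 0.2 output bias comes from the text.

```python
def matvec(M, v):
    """Matrix-vector product: each output entry is the dot product of one row with v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def augment(v):
    """Append a constant 1.0 so the bias can live in the matrix's last column."""
    return v + [1.0]


# Hidden layer: one row per unit, laid out as [w1, w2, bias].
# Row 1 is from the example; rows 2 and 3 are hypothetical.
W1 = [[0.3, 0.2, 1.0],
      [-0.5, 0.4, 0.1],
      [0.8, -0.6, -0.2]]
# Output layer: hypothetical weights, then the 0.2 bias from the example.
W2 = [[0.5, -1.0, 0.25, 0.2]]

x = [1.0, -1.0]
hidden = [max(z, 0.0) for z in matvec(W1, augment(x))]  # element-wise ReLU
z_out = matvec(W2, augment(hidden))[0]
y = 1.0 if z_out > 0 else 0.0  # step activation
```

The whole hidden layer is now the single variable `W1`, and all of the work happens inside `matvec`, which is the operation accelerators are built to speed up.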

Our toy example is, of course, very limited in scope. In practice, modern deep learning models can have tens if not hundreds of hidden layers, and millions of associated parameters. Instead of our two-dimensional vector input example, they can take in vectors with thousands of entries, in a variety of shapes, such as matrices (like single-channel images) or tensors (three-channel RGB images). There is also nothing stopping our matrix representation from taking in multiple input vectors at once, by adding rows to our original input. Neural networks can also be “wired” differently than our feedforward neural network, or execute different activation functions. There is a vast zoo of network architectures and techniques, but in the end, they mostly break down into the same parallel arithmetic operations we find in our toy example, just at a much larger scale.
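Processing several inputs at once simply turns the matrix-vector product into a matrix-matrix product. In this minimal sketch, each row of `X` is one augmented input (a trailing 1.0 for the bias), and `W` stores one hidden unit per column, so multiplying `X` by `W` yields one row of hidden activations per input. Apart from the first column (the first hidden unit from the example), all weights and inputs are hypothetical.

```python
def matmul(A, B):
    """(n x k) times (k x m) -> (n x m); every entry is one dot product."""
    columns = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in A]


# One hidden unit per column; the last row holds the biases.
# Column 1 is the first hidden unit from the example; columns 2-3 are hypothetical.
W = [[0.3, -0.5, 0.8],
     [0.2, 0.4, -0.6],
     [1.0, 0.1, -0.2]]

# A batch of three hypothetical inputs, one per row, each augmented with 1.0.
X = [[1.0, -1.0, 1.0],
     [0.0, 0.0, 1.0],
     [2.0, 0.5, 1.0]]

H = [[max(z, 0.0) for z in row] for row in matmul(X, W)]  # ReLU per element
```

Batching does not change the arithmetic, it only makes the matrices bigger, which is exactly the kind of workload that benefits from wide parallel hardware.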

Visual example of convolution layers operating on a tensor. (Image credit: Towards Data Science)

For example, the popular convolutional neural networks (CNNs) you have likely read about are not “fully-connected” like our mock network. The “weights” or parameters of their hidden convolutional layers can be thought of as a sort of filter, a sliding window applied sequentially to small patches of an input as shown above: this “convolution” is really just a sliding dot product! This procedure results in what’s often called a feature map. Pooling layers reduce the size of an input or a convolutional layer’s output by computing the maximum or average value of small patches of the image. The rest of the network usually consists of fully-connected layers, like the ones in our example, and activation functions like ReLU. CNNs are often used for feature extraction in images, where early convolutional layers’ feature maps can “detect” patterns such as lines or edges, and later layers can detect more complicated features such as faces or complex shapes.
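To show that convolution really is a sliding dot product, here is a minimal pure-Python sketch of a single-channel “valid” convolution (strictly speaking cross-correlation, since the kernel isn’t flipped, which is also what most deep learning frameworks compute) followed by 2×2 max pooling. The toy image and the vertical-edge kernel are hypothetical, chosen so the feature map visibly responds to an edge.

```python
def conv2d_valid(image, kernel):
    """Single-channel 'valid' convolution (cross-correlation form).
    Each output entry is the dot product of the kernel with the patch under it."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]  # one MAC
            row.append(acc)
        feature_map.append(row)
    return feature_map


def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size patch."""
    return [[max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


# Hypothetical 5x5 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 3x3 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

fmap = conv2d_valid(image, kernel)  # 3x3 feature map
pooled = max_pool(fmap, 2)          # 1x1 after 2x2 pooling
```

The feature map has large values where the kernel straddles the edge and zeros over the uniform bright region, and pooling keeps only the strongest response in each patch.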

All of what’s been said is strictly limited to inference, that is, evaluating a neural network after its parameters have been found through training, which is a much more complicated procedure. And again, we’ve left out a lot of explanation. In reality, each of the network’s components is included for a purpose. For example, those of you who have studied linear algebra can readily observe that without the non-linear activation functions, our network simplifies to a linear model with very limited predictive capacity.
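The linear algebra argument fits in one line. Writing two consecutive layers without an activation function as weight matrices $W_1$, $W_2$ and bias vectors $b_1$, $b_2$ (symbols introduced here for illustration), their composition is itself a single linear (affine) layer:

```latex
W_2\left(W_1 x + b_1\right) + b_2
  = \underbrace{\left(W_2 W_1\right)}_{W'} x + \underbrace{\left(W_2 b_1 + b_2\right)}_{b'}
```

However many such layers are stacked, the result collapses to one $W'$ and one $b'$, which is why the non-linearity between layers is essential.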

An Upgraded AI Engine on the Snapdragon 865 – A Summary of Improvements

With this handy understanding of the components of a neural network and their mathematical operations, we can begin to understand exactly why hardware acceleration is so important. In the last section, we observed that parallelization is vital to speeding up the network, since it allows us, for example, to compute several dot products, one per neuron activation, at the same time. Each of these dot products is itself made up of multiply-add operations on numbers, usually with 8-bit precision in the case of mobile applications, that must happen as quickly as possible. The AI Engine offers various components to offload these tasks depending on the performance and power efficiency considerations of the developer.

A diagram of a CNN for the popular MNIST dataset, shown on stage at this year’s Snapdragon Summit. The vector processing unit is a good fit for the fully-connected layers, like in our mock example. Meanwhile, the tensor processor handles the convolutional and pooling layers that process multiple sliding kernels in parallel, like in the diagram above, and each convolutional layer might output many separate feature maps.

First, let’s look at the GPU, which we usually speak about in the context of 3D games. The consumer market for video games has stimulated development in graphics processing hardware for decades, but why are GPUs so important for neural networks? For starters, they chew through massive lists of 3D coordinates of polygon vertices at once to keep track of an in-game world state. The GPU must also perform gigantic matrix multiplication operations to convert (or map) these 3D coordinates onto 2D planar, on-screen coordinates, and also handle the color information of pixels in parallel. To top it all off, GPUs offer high memory bandwidth to handle the massive memory buffers for the texture bitmaps overlaid onto the in-game geometry. Their advantages in parallelization, memory bandwidth, and the resulting linear algebra capabilities match the performance requirements of neural networks.

The Adreno GPU line thus has a big role to play in the Qualcomm AI Engine, and on stage, Qualcomm stated that this updated component in the Snapdragon 865 enables twice the floating-point capability and twice the number of TOPS compared to the previous generation, which is surprising given that they only posted a 25% performance uplift for graphics rendering. Still, for this release, the company boasts a 50% increase in the number of arithmetic logic units (ALUs), though, as usual, they have not disclosed their GPU frequencies. Qualcomm also listed mixed-precision instructions, which are just what they sound like: different numerical precisions across operations in a single computational method.

The Hexagon 698 DSP is where we see a huge chunk of the performance gains offered by the Snapdragon 865. This year, the company has not communicated improvements in their DSP’s vector eXtensions (whose performance quadrupled in last year’s 855), nor in their scalar units. However, they do note that for this block’s Tensor Accelerator, they’ve achieved four times the TOPS compared to the version introduced last year in the Hexagon 695 DSP, while also offering 35% better power efficiency. This is a big deal considering the prevalence of convolutional neural network architectures in modern AI use cases ranging from image object detection to automatic speech recognition. As explained above, the convolution operation in these networks produces a 2D array (a feature map) for each filter, meaning that when these maps are stacked together, the output of a convolution layer is a 3D array, or tensor.
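As a small illustration of why a convolution layer’s output is a tensor, this sketch computes a layer’s output shape from the standard output-size formula for a given kernel size, stride, and padding. The layer configuration (a 28×28 single-channel, MNIST-sized input with 32 filters of size 3×3) is hypothetical.

```python
def conv_output_shape(height, width, kernel, num_filters, stride=1, padding=0):
    """Output shape of a convolution layer with square kernels.

    Each filter yields one 2D feature map of size out_h x out_w; stacking
    one map per filter gives a 3D tensor (num_filters, out_h, out_w).
    """
    out_h = (height + 2 * padding - kernel) // stride + 1
    out_w = (width + 2 * padding - kernel) // stride + 1
    return (num_filters, out_h, out_w)


# Hypothetical layer: 28x28 single-channel input, 32 filters of 3x3, no padding.
shape = conv_output_shape(28, 28, kernel=3, num_filters=32)
```

Each of the 32 filters produces its own 26×26 feature map, and the stack forms a 32×26×26 tensor; per Qualcomm’s description, producing many such sliding-kernel outputs in parallel is the tensor accelerator’s job.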

Qualcomm also promoted their “new and unique” deep learning bandwidth compression technique, which can apparently compress data losslessly by around 50%, in turn moving half the data and freeing up bandwidth for other parts of the chipset. It should also save power by reducing that data throughput, though we weren’t given any figures and there ought to be a small power cost to compressing the data as well.

On the subject of bandwidth, the Snapdragon 865 supports LPDDR5 memory, which will also benefit AI performance by increasing the speed at which resources and input data are transferred. Beyond hardware, Qualcomm’s new AI Model Efficiency Toolkit makes model compression, and the resulting power efficiency savings, easily available to developers. Neural networks often have a large number of “redundant” parameters; for example, they may make hidden layers wider than they need to be. One of the AI Toolkit features discussed on stage is thus model compression, with two of the cited methods being spatial singular value decomposition (SVD) and Bayesian compression, both of which effectively prune the neural network by getting rid of redundant nodes and adjusting the model structure as required. The other model compression technique presented on stage relates to quantization, which involves changing the numerical precision of weight parameters and activation node computations.
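Qualcomm didn’t detail how its spatial SVD works, so the following is only a simplified stand-in: a generic low-rank (truncated SVD) factorization of a fully-connected layer, which shows where the savings come from. Replacing an m×n weight matrix with two thin factors of rank r cuts the parameter count from m·n to r·(m + n). The layer size and rank are hypothetical.

```python
def dense_params(m, n):
    """Weights in a fully-connected layer mapping n inputs to m outputs (m x n)."""
    return m * n


def low_rank_params(m, n, rank):
    """Weights after factorizing W (m x n) into A (m x rank) and B (rank x n).

    With a truncated SVD, W ~= U_r S_r V_r^T; folding S_r into either factor
    leaves two thin matrices, and the layer runs as two smaller products.
    """
    return rank * (m + n)


# Hypothetical 1024 x 1024 layer kept at rank 128.
before = dense_params(1024, 1024)
after = low_rank_params(1024, 1024, 128)
ratio = before / after
```

Since each weight takes part in exactly one multiply-accumulate per input vector, the MAC count shrinks by the same factor of 4 here. How far the rank can be reduced without hurting accuracy depends on the layer, which is the hard part a compression toolkit has to handle.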

The numerical precision of neural network weights refers to whether the numerical values used for computation are stored, transferred, and processed as 64-, 32-, 16- (half-precision) or 8-bit values. Using lower numerical precision (for example, INT8 versus FP32) reduces overall memory usage and the amount of data that must be transferred, easing bandwidth demands and allowing for faster inferences. A lot of today’s deep learning applications have switched to 8-bit precision models for inference, which might sound surprising: wouldn’t higher numerical accuracy enable more “accurate” predictions in classification or regression tasks? Not necessarily; higher numerical precision, particularly during inference, may be wasted, as neural networks are trained to cope with noisy inputs or small disturbances anyway, and the error of the lower-bit representation of a given floating-point (FP) value is uniformly ‘random’ enough. In a sense, the network treats the low precision of the computations as another source of noise, and the predictions remain usable. Heuristic explanations aside, you will likely accrue an accuracy penalty when naively quantizing a model without taking some important considerations into account, which is why a lot of research goes into the subject.
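Here is a minimal sketch of one common scheme, symmetric per-tensor INT8 quantization: each FP32 value is divided by a shared scale factor, rounded to the nearest integer in [-127, 127], and multiplied back by the scale when dequantized. It is not necessarily the scheme Qualcomm’s tools use, and the weight values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization.

    One shared scale maps the largest magnitude to 127; every value is then
    rounded to the nearest integer step and clamped to [-127, 127].
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map INT8 codes back to approximate floating-point values."""
    return [qi * scale for qi in q]


weights = [0.31, -0.72, 0.05, 1.27, -0.004]  # hypothetical FP32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The rounding error per weight is at most half the scale, the kind of small, noise-like perturbation a trained network tends to tolerate.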

Back to the Qualcomm AI Toolkit: through it, they offer data-free quantization, allowing models to be quantized without data or parameter fine-tuning while still achieving near-original model performance on various tasks. Essentially, it adapts weight parameters for quantization and corrects for the bias error introduced when switching to lower-precision weights. Given the benefits of quantization, automating the procedure under an API call would simplify model production and deployment, and Qualcomm claims more than four times the performance per watt when running the quantized model.
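Qualcomm didn’t spell out its algorithm on stage. As an illustration of the bias-correction idea only, the sketch below uses the formulation found in the data-free quantization literature: for a layer y = Wx + b, quantizing W to W_q shifts the expected output by (W_q - W)·E[x], so subtracting that shift from the bias removes the systematic error. The sketch assumes E[x] is already known; data-free methods estimate it from statistics stored in the model (for example, batch-normalization parameters) rather than from data. All weights, the coarse 0.1 quantization step, and E[x] are hypothetical.

```python
def fake_quantize(row, scale):
    """Round weights onto an integer grid with step `scale` (clamped to the
    INT8 range) and map them back to floats."""
    return [max(-127, min(127, round(w / scale))) * scale for w in row]


def layer_output(W, b, x):
    """y = W x + b for a small dense layer stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def corrected_bias(W, W_q, b, expected_x):
    """Bias correction: subtract the expected output shift (W_q - W) E[x]."""
    shift = [sum((wq - w) * ex for w, wq, ex in zip(row, row_q, expected_x))
             for row, row_q in zip(W, W_q)]
    return [bi - si for bi, si in zip(b, shift)]


# Hypothetical layer, deliberately coarse quantization step, and assumed E[x].
W = [[0.12, -0.47, 0.33],
     [0.58, 0.21, -0.09]]
b = [0.1, -0.2]
W_q = [fake_quantize(row, 0.1) for row in W]
expected_x = [1.0, 0.5, 2.0]
b_corr = corrected_bias(W, W_q, b, expected_x)

# Because the layer is linear, its mean output is the layer applied to E[x].
reference = layer_output(W, b, expected_x)
uncorrected = layer_output(W_q, b, expected_x)
corrected = layer_output(W_q, b_corr, expected_x)
```

Here the uncorrected layer’s first output drifts by about 0.095 on average, while the corrected bias brings the mean output back in line with the original layer.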

But again, this isn’t shocking: quantizing models can offer tremendous bandwidth and storage benefits. Converting a model from FP32 to INT8 not only nets you a 4x reduction in bandwidth, but also the benefit of faster integer computations (depending on the hardware). It is a no-brainer, then, that hardware-accelerated approaches to both the quantization and the numerical computation would yield massive performance gains. On his blog, for example, Google’s Pete Warden wrote that a collaboration between the Qualcomm and TensorFlow teams enables 8-bit models to run up to seven times faster on the HVX DSP than on the CPU. It’s hard to overstate the potential of easy-to-use quantization, particularly given how Qualcomm has focused on INT8 performance.
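The 4x figure is simple arithmetic: an FP32 parameter occupies 4 bytes and an INT8 parameter 1 byte. A minimal sketch, using a hypothetical 25-million-parameter model:

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_size_mb(num_params, precision):
    """Storage for the weights alone, which is also the data moved to read them once."""
    return num_params * BYTES_PER_PARAM[precision] / 1e6


NUM_PARAMS = 25_000_000  # hypothetical model size

fp32_mb = weights_size_mb(NUM_PARAMS, "fp32")
int8_mb = weights_size_mb(NUM_PARAMS, "int8")
```

Every full pass over the INT8 weights therefore moves a quarter of the bytes, before counting any speedup from the integer arithmetic itself.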

The Snapdragon 865’s ARM-based Kryo CPU is still an important component of the AI Engine. Even though the hardware acceleration discussed in the above paragraphs is preferable, sometimes we can’t avoid applications that do not properly take advantage of these blocks, resulting in CPU fallback. Over the years, ARM has introduced specific instruction sets aimed at accelerating matrix- and vector-based calculations. With ARMv7 processors, we saw the introduction of ARM NEON, a SIMD architecture extension enabling DSP-like instructions. And later revisions of the ARMv8-A architecture introduced instructions specifically for dot products (optional from ARMv8.2-A and mandatory from ARMv8.4-A).
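To illustrate what a dot-product instruction buys, this Python sketch emulates the semantics of one 128-bit signed dot-product step in the style of ARM’s SDOT: sixteen int8 values from each operand are split into four groups of four, and each group’s dot product is added to one of four 32-bit accumulator lanes. That is sixteen multiply-accumulates in a single instruction. It only models the arithmetic; real code would reach the instruction through a compiler (in C, for example, via the ACLE intrinsic `vdotq_s32`). The function name is hypothetical.

```python
def sdot_emulated(acc, a, b):
    """Emulate one 128-bit signed dot-product step (SDOT-style semantics).

    acc: four int32 accumulator lanes; a, b: sixteen int8 values each.
    Lane i accumulates the dot product of a[4i:4i+4] and b[4i:4i+4].
    """
    if len(acc) != 4 or len(a) != 16 or len(b) != 16:
        raise ValueError("expected 4 accumulator lanes and 16 int8 inputs per operand")
    if any(not -128 <= v <= 127 for v in a + b):
        raise ValueError("inputs must fit in a signed 8-bit integer")
    result = []
    for lane in range(4):
        base = 4 * lane
        total = acc[lane] + sum(a[base + k] * b[base + k] for k in range(4))
        # Wrap to 32-bit two's complement, as a hardware register would.
        total = (total + 2**31) % 2**32 - 2**31
        result.append(total)
    return result


# Sixteen int8 multiply-accumulates in one emulated "instruction".
lanes = sdot_emulated([0, 0, 0, 0], list(range(16)), [1] * 16)
```

Accumulating into 32-bit lanes is what keeps sums of many 8-bit products from overflowing, which is why INT8 inference pairs naturally with this kind of instruction.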

All of these posted performance gains relate to many of the workloads we described in the previous section, but it’s also worth keeping in mind that these Snapdragon 865 upgrades are only the latest improvements in Qualcomm’s AI capabilities. In 2017, we documented their tripling of AI capabilities with the Hexagon 685 DSP and other chipset updates. Last year, they introduced their tensor accelerator, and integrated support for non-linearity functions (like the aforementioned ReLU!) at the hardware level. They also doubled the number of vector accelerators and improved the scalar processing unit’s performance by 20%. Pairing all of this with enhancements on the CPU side, like those faster dot-product operations courtesy of ARM, and the additional ALUs in the GPU, Qualcomm ultimately tripled raw AI capabilities as well.

Practical Gains and Expanded Use-Cases

All of these upgrades have led to five times the AI capabilities on the Snapdragon 865 compared to just two years ago, but perhaps most importantly, the improvements also came with better performance per milliwatt, a critical metric for mobile devices. At the Snapdragon Summit 2019, Qualcomm gave us a few benchmarks comparing their AI Engine against two competitors on various classification networks. These figures appear to have been collected using AIMark, a cross-platform benchmarking application, which enables comparisons against Apple’s A-series and Huawei’s HiSilicon processors. Qualcomm claims that these results make use of the entire AI Engine, and we’ll have to wait for more thorough benchmarking to properly disentangle the effect of each component and determine how these tests were conducted. For example, do the results from company B indicate CPU fallback? As far as I’m aware, AIMark currently doesn’t take advantage of the Kirin 990’s NPU on our Mate 30 Pro units. But it does support the Snapdragon Neural Processing Engine, so it will certainly take advantage of the Qualcomm AI Engine; and given this is internal testing, it’s not explicitly clear whether the benchmark is properly utilizing the right libraries or SDK for its competitors.

It must also be said that Qualcomm is effectively comparing the Snapdragon 865’s AI processing capabilities against previously-announced or released chipsets. It is very likely that its competitors will bring similarly-impactful performance improvements in the next cycle, and if that’s the case, then Qualcomm would only hold the crown for around half a year from the moment Snapdragon 865 devices hit the shelves. That said, these are still indicative of the kind of bumps we can expect from the Snapdragon 865. Qualcomm has generally been very accurate when communicating performance improvements and benchmark results of upcoming releases.

All of the networks presented in these benchmarks are classifying images from databases like ImageNet, receiving them as inputs and outputting one out of hundreds of categories. Again, they rely on the same kinds of operations we described in the second section, though their architectures are a lot more complicated than our toy example, and they were regarded as state-of-the-art solutions at their time of publication. In the best of cases, their closest competitor provides less than half the number of inferences per second.

In terms of power consumption, Qualcomm offered inferences per watt figures to showcase the amount of AI processing possible in a given amount of power. In the best of cases (MobileNet SSD), the Snapdragon AI Engine can offer double the number of inferences under the same power budget.

Power is particularly important for mobile devices. Think, for example, of a neural network-based Snapchat filter. Realistically, the computer vision pipeline extracting facial information and applying a mask or input transformation only needs to run at a rate of 30 or 60 completions per second to achieve a fluid experience. Increasing raw AI performance would enable you to take higher-resolution inputs and output better-looking filters, but it might also simply be preferable to settle for HD resolution for quicker uploads and to reduce power consumption and thermal throttling. In many applications, “faster” isn’t necessarily “better”, and one then gets to reap the benefits of improved power efficiency.
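The frame-rate argument is easy to quantify. This sketch computes the per-frame time budget at 30 and 60 frames per second and the share of that budget an inference would occupy; the 8 ms inference time is a hypothetical figure, not a measured one.

```python
def frame_budget_ms(fps):
    """Time available per frame (in milliseconds) at a target frame rate."""
    return 1000.0 / fps


def inference_share(inference_ms, fps):
    """Fraction of each frame period spent running inference.

    Values above 1.0 mean the target frame rate cannot be sustained; the rest
    of the period is slack in which the hardware can idle.
    """
    return inference_ms / frame_budget_ms(fps)


INFERENCE_MS = 8.0  # hypothetical per-frame inference time

for fps in (30, 60):
    print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
          f"{inference_share(INFERENCE_MS, fps):.0%} spent on inference")
```

With the hypothetical 8 ms figure, inference takes roughly a quarter of each frame at 30 fps and about half at 60 fps. Once a network fits comfortably inside the budget, extra speed mostly turns into idle time, which is where performance per milliwatt matters more than raw throughput.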

During Day 2 of the Snapdragon Summit, Yurii Monastyrshyn, Senior Director of Engineering at Snapchat, took the stage to show how their latest deep learning-based filters are greatly accelerated by Hexagon Direct NN using the Hexagon 698 DSP on the Snapdragon 865.

On top of that, as developers get access to easier neural network implementations and more applications begin employing AI techniques, concurrency use cases will take more of a spotlight, as the smartphone will have to handle multiple parallel AI pipelines at once (either for a single application processing input signals from various sources, or for many applications running separately on-device). While we see respectable power efficiency gains across the compute DSP, GPU, and CPU, the Qualcomm Sensing Hub handles always-on use cases to listen for trigger words at very low power consumption. It enables monitoring audio, video, and sensor feeds at under 1 mA of current, allowing the device to spot particular sound cues (like a baby crying), on top of the familiar digital assistant keywords. On that note, the Snapdragon 865 enables detecting not just the keyword but also who is speaking it, to identify an authorized user and act accordingly.

More AI on Edge Devices

These improvements can ultimately translate into tangible benefits for your user experience. Services that involve translation, object recognition and labeling, usage predictions or item recommendations, natural language understanding, speech parsing and so on will gain the benefit of operating faster and consuming less power. Having a higher compute budget also enables the creation of new use cases and experiences, and moving processes that used to take place in the cloud onto your device. While AI as a term has been used in dubious, deceptive and even erroneous ways in the past (even by OEMs), many of the services you enjoy today ultimately rely on machine learning algorithms in some form or another.

But beyond Qualcomm, other chipset makers have been quickly iterating and improving on this front too. For example, Huawei’s Kirin 990 5G brought a 2+1 NPU core design resulting in up to 2.5 times the performance of the Kirin 980, and twice that of the Apple A12. When the processor was announced, it was shown to offer up to twice the frames (inferences) per second of the Snapdragon 855 on INT8 MobileNet, which is hard to square with the results provided by Qualcomm. The Apple A13 Bionic, on the other hand, reportedly offered up to six times faster matrix multiplication over its predecessor and improved its eight-core Neural Engine design. We will have to wait until we can properly test the Snapdragon 865 on commercial devices against its current and future competitors, but it’s clear that competition in this space never stays still, as the three companies have been pouring a ton of resources into bettering their AI performance.

The post How Qualcomm Brought Tremendous Improvements in AI Performance to the Snapdragon 865 appeared first on xda-developers.


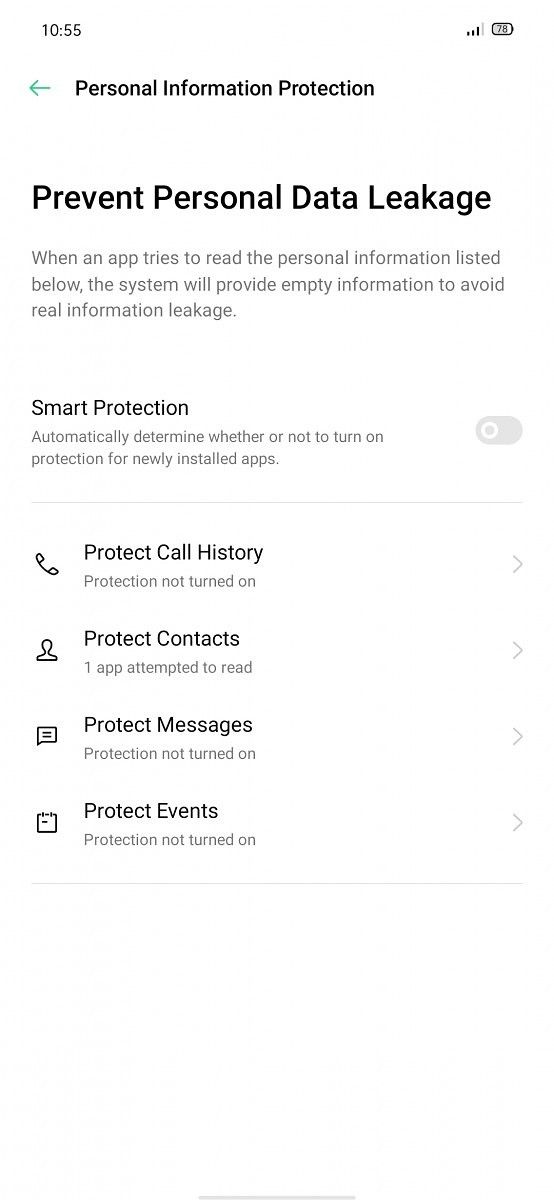

In terms of imaging additions, the camera app of ColorOS 7 is visually similar to that of ColorOS 6.0, but comes with functional improvements. Specifically, it has a new Ultra Night Mode. This does prove its worth in the OPPO Reno 10x Zoom by improving image quality to the point where the night mode is a serious competitor for
